Several practices can reduce how often you run into CAPTCHAs when web scraping:

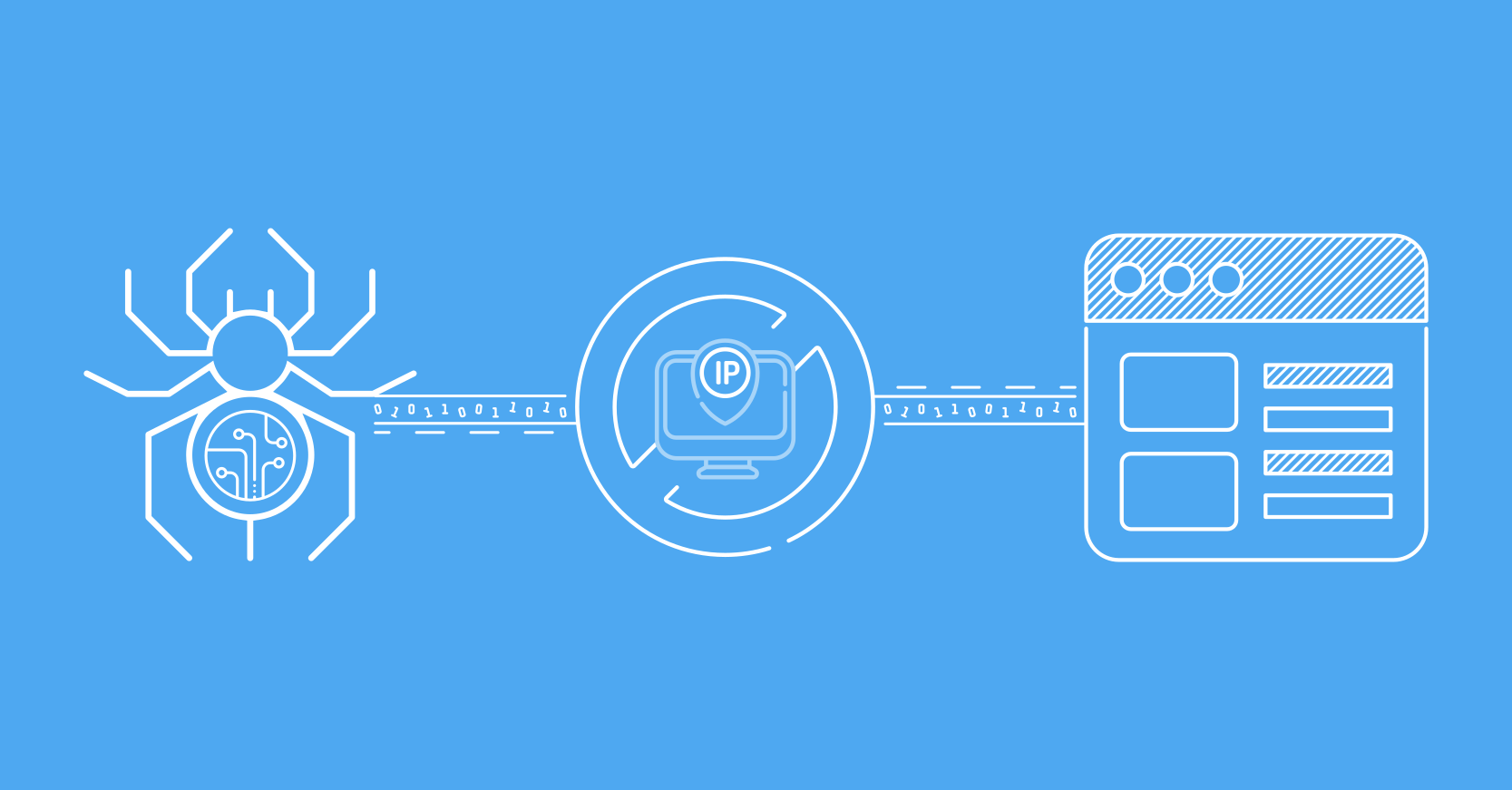

- Use a web scraping library that allows you to rotate through a list of proxies and user agents. This can help to prevent your IP address from getting blocked.

- Use a headless browser for web scraping, driven by a tool such as Selenium or Puppeteer. Because it runs a real browser engine, it can mimic the behavior of a real user and make it less likely that the website detects you as a scraper.

- Limit the number of requests made to the website in a given period of time. This can help to prevent the website from detecting that you are a scraper and blocking your IP address.

- Use a CAPTCHA solving service to automatically solve CAPTCHAs as they appear.

- Keep cookies and reuse the same session across requests, so your scraper behaves like a real user’s browser.

- Regularly check the website’s terms of service and robots.txt file to ensure that web scraping is allowed.

- Find out which anti-bot or anti-scraping system the website uses, such as Cloudflare, reCAPTCHA, or hCaptcha, so you can adapt your approach to it.

- Use a random delay between requests to mimic a human browsing pattern and avoid detection.

- Scrape during off-peak hours to avoid detection.

- Do not scrape sensitive information like personal data, email addresses, and phone numbers, as pages that expose such data tend to be more heavily protected and are more likely to trigger CAPTCHAs.

- Consider using a VPN or a rotating proxy service to mask your IP address and make it harder for the website to detect that you are a scraper.

- Monitor your scraping activity (request rates, error codes, block pages) and check for patterns that might trigger CAPTCHAs.

- Use scraping software that has built-in anti-CAPTCHA features, such as rotating IP addresses, random user agents, and varied browser fingerprints.

- If the website uses hCaptcha, a browser extension like Privacy Pass can reduce how often you are challenged by reusing tokens earned from CAPTCHAs you have already solved.

- Try to scrape only the publicly available information on the website, as private information is more likely to be protected by CAPTCHAs.

- Scrape a cached copy of the page, such as Google cache where it is still available, as the cached copy is less likely to have the same CAPTCHA protection as the live website.

- Consider using a web-scraping-as-a-service company that has a dedicated team and advanced tools to bypass CAPTCHAs and other anti-scraping measures.

- Be mindful of the legal issues surrounding web scraping, as some websites may take legal action against scrapers who violate their terms of service or other policies.

- Use a browser extension like ‘User-Agent Switcher’ to change the browser’s user agent, which can make it harder for the website to detect that you are a scraper.

- Combine several of the above techniques rather than relying on one, so that you can scrape the website successfully without getting blocked by CAPTCHAs.

- For text-based image CAPTCHAs, use a CAPTCHA solving service that uses OCR to recognize the image and return the text.

- You can also use a CAPTCHA solving service that uses a team of human workers to solve CAPTCHAs manually.

- Use scraping tools that have built-in CAPTCHA solving capabilities, such as Scrapy-Captcha and Scrapy-Anti-Captcha.

- Keep in mind that some websites use CAPTCHAs that are difficult to get past. reCAPTCHA v3, for example, shows no puzzle to solve; it uses a score-based system to detect bots from visitor behavior. In such cases, bypassing the CAPTCHA is more challenging and you may need to use advanced techniques.

- Use CAPTCHA solving services that can be integrated into your scraping code, so you can automatically solve CAPTCHAs as they appear.

- Use CAPTCHA solving services that solve the CAPTCHA using machine learning algorithms.

- Use CAPTCHA solving services that solve the CAPTCHA using image recognition techniques.

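- Try a different browser or configuration, such as disabling JavaScript, cookies, or other features, to see whether it changes how often CAPTCHAs appear. Be aware that many websites depend on these features and may challenge clients that lack them.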

- Use a browser extension like ‘NoScript’ to block scripts that might trigger CAPTCHAs.

- Lastly, keep your scraping tools and libraries updated with the latest features and security patches, since outdated versions are more easily detected by websites.
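
Several of the items above (rotating user agents, using a proxy, keeping cookies across requests, and pausing a random interval between requests) can be combined in a few lines. The following is a minimal sketch using only Python’s standard library, not a definitive implementation. The user-agent strings, the delay range, and the helper names (`build_opener_with_cookies`, `build_request`, `fetch_all`) are assumptions made for illustration.

```python
import random
import time
import urllib.request
from http.cookiejar import CookieJar

# Illustrative user-agent strings (assumed values); maintain your own list.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/17.0 Safari/605.1.15",
    "Mozilla/5.0 (X11; Linux x86_64; rv:121.0) Gecko/20100101 Firefox/121.0",
]


def random_delay(min_s=2.0, max_s=6.0):
    """Return a random pause length in seconds (the range is an assumption)."""
    return random.uniform(min_s, max_s)


def build_opener_with_cookies(proxy=None):
    """Create an opener that keeps cookies across requests, like a browser session.

    `proxy` is an optional proxy URL (hypothetical, e.g. "http://host:port").
    """
    jar = CookieJar()
    handlers = [urllib.request.HTTPCookieProcessor(jar)]
    if proxy:
        handlers.append(urllib.request.ProxyHandler({"http": proxy, "https": proxy}))
    return urllib.request.build_opener(*handlers), jar


def build_request(url):
    """Attach a randomly chosen User-Agent header to a request."""
    return urllib.request.Request(
        url, headers={"User-Agent": random.choice(USER_AGENTS)}
    )


def fetch_all(urls, opener, sleep=time.sleep):
    """Fetch URLs one at a time, pausing a random interval between requests."""
    pages = []
    for i, url in enumerate(urls):
        if i > 0:
            sleep(random_delay())
        with opener.open(build_request(url), timeout=30) as resp:
            pages.append(resp.read())
    return pages
```

You would call `build_opener_with_cookies()` once and pass the same opener to `fetch_all` so that cookies set by the website persist for the whole run.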

These techniques can help prevent CAPTCHAs while web scraping, but they are not always effective, and you should always comply with the website’s terms of service and any legal requirements.
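
The robots.txt check mentioned in the list can be automated with Python’s standard `urllib.robotparser` module. The sketch below parses robots.txt text you have already downloaded and asks whether a URL may be fetched. The `is_allowed` helper and the sample rules are hypothetical, and a robots.txt check does not replace reading the terms of service.

```python
from urllib.robotparser import RobotFileParser

# Sample robots.txt content (made up for illustration).
SAMPLE_ROBOTS_TXT = """User-agent: *
Disallow: /private/
"""


def is_allowed(robots_txt, url, user_agent="*"):
    """Return True if the given robots.txt text permits `user_agent` to fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for url in ("https://example.com/index.html", "https://example.com/private/data"):
        print(url, is_allowed(SAMPLE_ROBOTS_TXT, url))
```

In a real scraper you could instead call `set_url()` and `read()` on the parser to download the live file before checking each URL.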

Some websites use sophisticated anti-scraping measures, and it may not always be possible to bypass them. In such cases, it’s best to seek alternative sources of data or consider other methods of data collection.
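
One way to act on this is to have the scraper notice when it is being challenged, back off, and eventually give up instead of retrying forever. The sketch below is a rough heuristic under stated assumptions: the marker strings, the status codes treated as a block, the backoff values, and the function names are all illustrative, and `fetch` stands for any function you supply that returns a `(status_code, body)` pair.

```python
import time

# Heuristic markers (assumed): class names used by the reCAPTCHA and hCaptcha
# widgets, plus the generic word "captcha".
CAPTCHA_MARKERS = ("g-recaptcha", "h-captcha", "captcha")


def looks_blocked(status_code, body):
    """Guess whether a response is a block or CAPTCHA page rather than real content."""
    if status_code in (403, 429):
        return True
    lowered = body.lower()
    return any(marker in lowered for marker in CAPTCHA_MARKERS)


def backoff_schedule(base_s=5.0, factor=2.0, max_attempts=4):
    """Return the waits (in seconds) between attempts, growing exponentially."""
    return [base_s * factor ** i for i in range(max_attempts)]


def fetch_or_give_up(fetch, url, sleep=time.sleep):
    """Try `fetch(url)` a few times; return the body, or None if still blocked."""
    for wait in backoff_schedule():
        status_code, body = fetch(url)
        if not looks_blocked(status_code, body):
            return body
        sleep(wait)  # slow down before the next attempt
    return None  # signal the caller to look for another data source
```

A `None` result is the point at which switching to an alternative data source makes more sense than sending more requests.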